Testing, Testing, 1-2-AI: Automate Your Software Tests

Why AI is Revolutionizing Quality Assurance

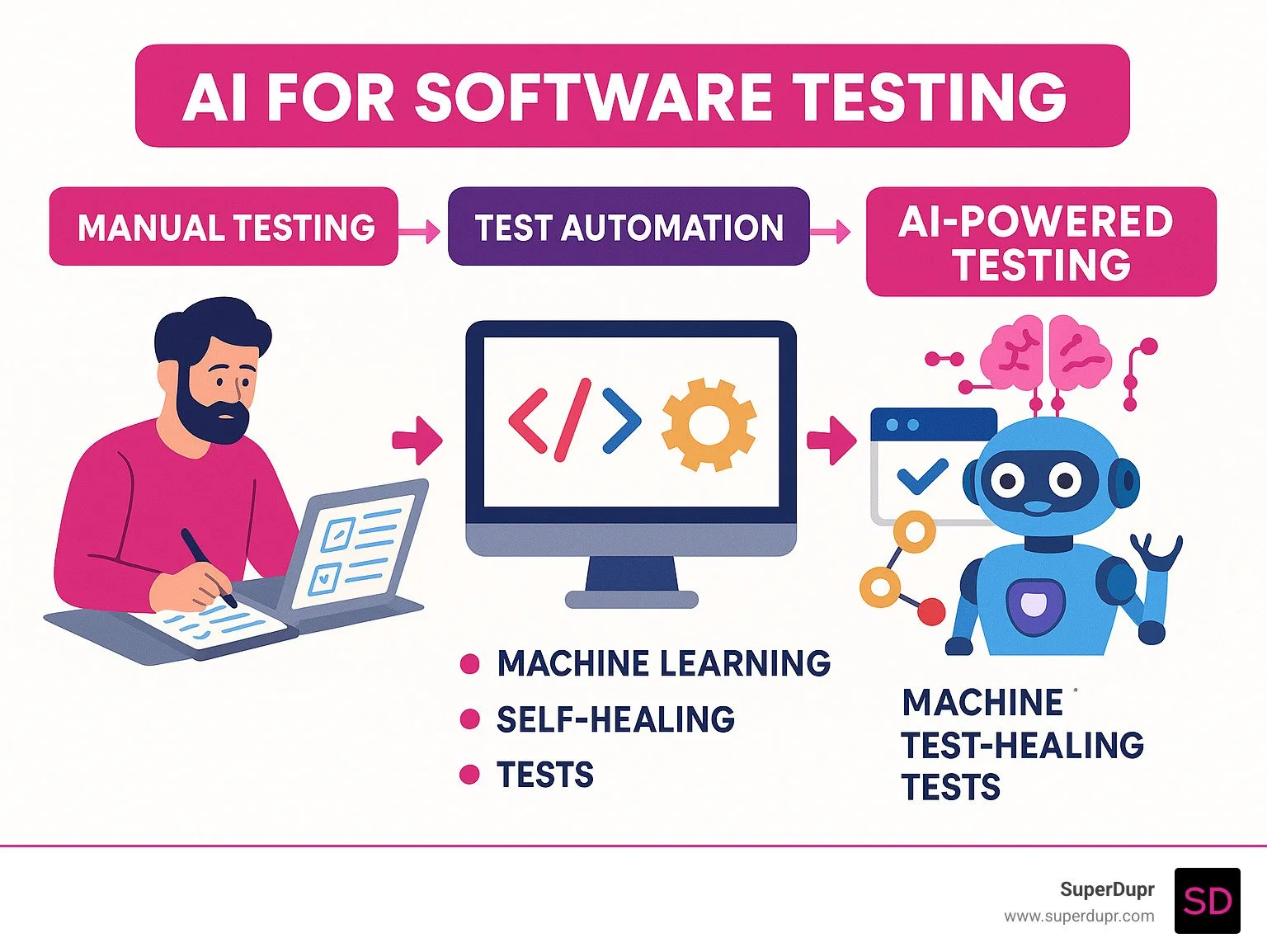

AI for software testing is changing how teams catch bugs, reduce maintenance overhead, and ship higher-quality software faster. Instead of manually writing test scripts or maintaining brittle automation, AI-powered tools can generate test cases, predict defects, and self-heal broken tests automatically.

Key AI Testing Capabilities:

Test Generation: AI analyzes your app and creates comprehensive test suites automatically

Self-Healing: Tests adapt to UI changes without manual script updates

Defect Prediction: Machine learning identifies high-risk code areas before bugs occur

Visual Testing: Computer vision detects UI regressions across devices and browsers

Maintenance Reduction: Some reports cite up to 95% less time spent fixing broken tests

The numbers tell the story: 70% of manual QA tasks can be automated with AI, and teams report 80% cost savings compared to traditional automation frameworks. With 45% of software released without proper security checks and 60% of teams spending 10+ hours weekly on test maintenance, AI isn't just helpful—it's becoming essential.

I'm Justin McKelvey, and at SuperDupr I've guided clients through implementing AI for software testing solutions that have transformed their release cycles and quality outcomes. My experience optimizing digital processes has shown me how AI testing accelerates time-to-market while reducing the technical debt that slows down growing businesses.

Why AI Changes Software Testing

Let's be honest—traditional testing struggles to keep pace with modern release cycles. Manual testing catches obvious issues but is slow, and classic automation breaks whenever the UI shifts. AI for software testing flips the script by learning from data, prioritizing risk, and adapting as your product evolves.

Core Advantages

Defect prediction: ML combs through commit history, code complexity, and past bugs to spotlight high-risk areas.

Self-healing automation: When a locator changes, AI finds the element another way—no more brittle scripts.

Low-code test creation: Describe a scenario in plain English; the platform converts it into an executable test.

Continuous, risk-based testing: AI runs the right tests at the right time, cutting execution time by up to 70 %.

Security & edge-case discovery: Dynamic fuzzing generates inputs humans would never dream up.
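Of the advantages above, fuzzing is the easiest to demonstrate in a few lines. The sketch below is a plain random fuzzer using only Python's standard library, not how any particular AI platform works. It feeds randomly generated strings to a function and records the first input that triggers each exception type. `initials`, `random_input`, and `fuzz` are hypothetical names, and `initials` carries a deliberately planted edge-case bug. Production fuzzers usually steer input generation with coverage feedback instead of sampling blindly.

```python
import random
import string


def initials(full_name: str) -> str:
    """Hypothetical function under test: 'Ada Lovelace' -> 'AL'.

    Planted bug: an empty string or repeated spaces yields an empty
    part, and part[0] then raises IndexError.
    """
    return "".join(part[0].upper() for part in full_name.split(" "))


def random_input(rng: random.Random, max_len: int = 12) -> str:
    """Build a random string mixing letters, digits, spaces and punctuation."""
    alphabet = string.ascii_letters + string.digits + "   " + string.punctuation
    length = rng.randint(0, max_len)
    return "".join(rng.choice(alphabet) for _ in range(length))


def fuzz(func, trials: int = 1000, seed: int = 0) -> dict:
    """Call func on random inputs; map each exception type to the first input that raised it."""
    rng = random.Random(seed)  # fixed seed keeps findings reproducible
    findings = {}
    for _ in range(trials):
        data = random_input(rng)
        try:
            func(data)
        except Exception as exc:  # every crash is a finding worth triaging
            findings.setdefault(type(exc).__name__, data)
    return findings


print(fuzz(initials))
```

Each finding is a concrete failing input, so it can be copied straight into an ordinary regression test once the bug is fixed.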

Key Benefits at a Glance

| Metric | Typical Gain |
|---|---|
| Manual tasks automated | ~70% |
| Test authoring time | ↓ 95% |
| Cost savings | ↑ 80% |
| Coverage increase | 34% → 90% |
| Critical bugs in prod | ↓ 60% |

Limitations & Ethical Watch-outs

Data privacy (GDPR/HIPAA), model bias, and the need for human oversight still apply. Use anonymized or synthetic data, perform bias audits, and keep clear governance around when AI vs. humans make decisions.

Implementing AI for Software Testing: Step-By-Step

You don’t have to scrap your current pipeline—just layer AI on top.

Pipeline integration: Plug AI tools into your CI/CD so tests run automatically and results feed your dashboards.

Targeted test generation: Start with mission-critical user flows (checkout, onboarding). AI observes patterns and produces additional cases over time.

Visual AI & API intelligence: Computer vision spots UI drift across browsers; ML-driven API tests create realistic data and watch for contract changes.

Performance monitoring: AI learns normal response times and flags anomalies long before users notice.
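The performance-monitoring step rests on a simple idea: learn what normal looks like, then flag departures from it. A minimal sketch, assuming latencies are collected as plain millisecond samples, is a mean and standard-deviation baseline with a z-score threshold. This is a deliberately simple statistical stand-in for whatever model a given tool uses; the function names, sample values, and default threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev


def build_baseline(samples_ms: list[float]) -> tuple[float, float]:
    """Learn 'normal' latency as the mean and sample standard deviation."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to estimate spread")
    return mean(samples_ms), stdev(samples_ms)


def is_anomalous(latency_ms: float, baseline: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag a response more than `threshold` standard deviations slower than normal."""
    mu, sigma = baseline
    if sigma == 0:
        return latency_ms > mu  # perfectly stable history: any slowdown stands out
    return (latency_ms - mu) / sigma > threshold


history = [120, 118, 125, 122, 119, 121, 124, 117]  # hypothetical samples (ms)
baseline = build_baseline(history)
print(is_anomalous(123, baseline))  # False: within normal variation
print(is_anomalous(250, baseline))  # True: roughly double the usual latency
```

Raising `threshold` trades sensitivity for fewer false alarms; that is the knob to tune when alerts get noisy.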

Prepare Your Data & Environment

Track everything in version control—tests, configs, even model versions.

Feed the AI cleaned historical defect data; patterns emerge fast.

When real data is off-limits, generate synthetic data that mimics production.

Use GPU cloud resources so heavy visual and model training jobs don’t hog your build servers.
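For the synthetic-data step, one simple approach is to sample records from distributions that match production's shape without copying any real customer. The sketch below assumes a hypothetical `SyntheticUser` schema and made-up country weights; in practice you would derive the proportions from aggregated, non-identifying production statistics. A fixed seed makes every test run see the same data.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticUser:
    user_id: int
    email: str
    country: str
    cart_total: float


# Hypothetical production-like distribution; replace with proportions
# measured from your own aggregated statistics.
COUNTRY_WEIGHTS = {"US": 0.5, "DE": 0.2, "IN": 0.2, "BR": 0.1}


def make_users(n: int, seed: int = 42) -> list[SyntheticUser]:
    """Generate n fake users that mimic production proportions."""
    rng = random.Random(seed)  # seeded so every test run gets identical data
    countries = list(COUNTRY_WEIGHTS)
    weights = list(COUNTRY_WEIGHTS.values())
    users = []
    for i in range(n):
        users.append(SyntheticUser(
            user_id=i + 1,
            email=f"user{i + 1}@example.test",  # reserved TLD, never a real inbox
            country=rng.choices(countries, weights=weights, k=1)[0],
            # Log-normal spend: most carts are small, with a long tail of large ones.
            cart_total=round(rng.lognormvariate(3.5, 0.8), 2),
        ))
    return users


for user in make_users(3):
    print(user)
```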

Plug-In & Configure AI Engines

Self-healing locators: Train once; they then adapt as the UI changes.

Anomaly detection & smart alerts: Calibrate thresholds to avoid noise.

Actionable dashboards: Risk scores, coverage gaps, and fix suggestions—not just pass/fail counts.
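The self-healing idea can be illustrated without a browser. In this sketch a page is modeled as a hypothetical list of attribute dictionaries, and the locator remembers a fingerprint of the element's attributes from the last passing run. If the `id` lookup fails, it falls back to the element sharing the most remembered attributes, and `min_score` is the threshold to calibrate. Real tools wire this into Selenium or Playwright and may weigh richer signals; `find_element` here is an illustrative name, not any library's API.

```python
from typing import Optional

Element = dict[str, str]  # simplified stand-in for a DOM node's attributes


def find_element(page: list[Element], fingerprint: Element,
                 min_score: float = 0.5) -> Optional[Element]:
    """Locate an element by id, healing via attribute similarity if the id changed."""
    if not fingerprint:
        return None
    # Fast path: the original locator still works.
    wanted_id = fingerprint.get("id")
    for el in page:
        if wanted_id is not None and el.get("id") == wanted_id:
            return el
    # Healing path: score each element by the share of remembered attributes it still has.
    best, best_score = None, 0.0
    for el in page:
        matches = sum(1 for key, value in fingerprint.items() if el.get(key) == value)
        score = matches / len(fingerprint)
        if score > best_score:
            best, best_score = el, score
    return best if best_score >= min_score else None


# Fingerprint recorded while the test last passed.
buy_button = {"id": "buy-btn", "tag": "button", "text": "Buy now", "class": "btn primary"}
# After a redesign the id changed, but most other attributes survived.
page = [
    {"id": "nav-home", "tag": "a", "text": "Home"},
    {"id": "checkout-cta", "tag": "button", "text": "Buy now", "class": "btn primary"},
]
print(find_element(page, buy_button)["id"])  # checkout-cta
```

A healed match is worth logging for human review, since the highest-scoring element is not guaranteed to be the intended one.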

Scale & Monitor in Production

Flaky-test recovery: AI retries or quarantines suspect cases automatically.

AI-powered release gates: Combine risk scores and historical data for smarter go/no-go calls.

Continuous learning: New prod incidents feed back into the model, making tomorrow’s tests better.
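Flaky-test handling needs a working definition of "flaky." A common heuristic, used in this sketch, is that a test which both passed and failed on the same commit is flaky, because the code did not change between runs. The run-record format and the names `find_flaky` and `partition_suite` are hypothetical; AI-based tools may combine more signals such as timing, environment, or failure messages, but the quarantine decision follows the same shape.

```python
from collections import defaultdict

Run = tuple[str, str, bool]  # (test_name, commit_sha, passed)


def find_flaky(runs: list[Run]) -> set[str]:
    """Tests that produced both a pass and a fail on the same commit."""
    outcomes = defaultdict(set)  # (test, commit) -> set of observed outcomes
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _commit), results in outcomes.items() if len(results) > 1}


def partition_suite(tests: list[str], runs: list[Run]) -> tuple[list[str], list[str]]:
    """Split the suite into release-gating tests and quarantined flaky ones."""
    flaky = find_flaky(runs)
    gating = [t for t in tests if t not in flaky]
    quarantined = [t for t in tests if t in flaky]
    return gating, quarantined


runs = [
    ("test_login", "a1f3", True),
    ("test_login", "a1f3", False),     # same commit, different outcome: flaky
    ("test_checkout", "a1f3", True),
    ("test_checkout", "b7c9", False),  # failed on a new commit: likely a real regression
    ("test_search", "a1f3", True),
]
print(partition_suite(["test_login", "test_checkout", "test_search"], runs))
# (['test_checkout', 'test_search'], ['test_login'])
```

Note that `test_checkout` stays in the gating set: its failure came with a code change, so it should block the release rather than be quarantined.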

AI for Software Testing vs. Traditional & Manual QA

Here's something that might surprise you: AI for software testing isn't trying to replace human testers or traditional automation. Instead, it's creating a powerful partnership where each approach handles what it does best.

Think of it like a restaurant kitchen. Manual testing is your head chef—creative, intuitive, and perfect for tasting dishes and making judgment calls. Traditional automation is your prep cook—reliable and fast at repetitive tasks once you show them the recipe. AI for software testing is like having a sous chef who learns from both, anticipates what's needed next, and handles the complex coordination.

Risk-based prioritization shows where AI really shines. While human testers naturally focus on the obvious trouble spots, AI digs through mountains of historical data to spot patterns we'd never notice. It might find that features added on Fridays tend to have more bugs, or that certain code patterns predict performance issues.
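Risk-based prioritization can start much simpler than a trained model. This sketch scores each file from the three signals listed under defect prediction (commit history, past bugs, and code complexity), normalizes each against the riskiest file, and ranks by a weighted sum. The `FileStats` fields, the example numbers, and the weights are hypothetical; an ML approach would learn the weights from labelled defect history instead of fixing them by hand.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    commits_last_90d: int  # churn
    past_bug_fixes: int    # commits linked to bug tickets
    complexity: int        # e.g. cyclomatic complexity from a linter


# Hypothetical hand-picked weights; a trained model would learn these.
WEIGHTS = {"churn": 0.4, "bugs": 0.4, "complexity": 0.2}


def risk_ranking(files: list[FileStats]) -> list[tuple[str, float]]:
    """Rank files by a weighted, max-normalized risk score in [0, 1]."""
    # "or 1" avoids division by zero when every file has a zero for a signal.
    max_churn = max((f.commits_last_90d for f in files), default=0) or 1
    max_bugs = max((f.past_bug_fixes for f in files), default=0) or 1
    max_cx = max((f.complexity for f in files), default=0) or 1
    scored = []
    for f in files:
        score = (WEIGHTS["churn"] * f.commits_last_90d / max_churn
                 + WEIGHTS["bugs"] * f.past_bug_fixes / max_bugs
                 + WEIGHTS["complexity"] * f.complexity / max_cx)
        scored.append((f.path, round(score, 3)))
    return sorted(scored, key=lambda item: item[1], reverse=True)


files = [
    FileStats("checkout.py", commits_last_90d=30, past_bug_fixes=8, complexity=45),
    FileStats("auth.py", commits_last_90d=12, past_bug_fixes=4, complexity=60),
    FileStats("utils.py", commits_last_90d=5, past_bug_fixes=0, complexity=10),
]
for path, score in risk_ranking(files):
    print(f"{path:<12} {score}")
```

Tests that cover the top-ranked files would then run first, so the riskiest changes get feedback soonest.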

But human creativity remains absolutely essential. When users find unexpected ways to break your app (and they always do), it's human testers who think outside the box. They're the ones who ask "What if someone tries to upload a 50MB image as their profile picture?" or notice that a button placement feels awkward.

Exploratory testing becomes more effective when humans and AI work together. AI can suggest unexplored areas based on coverage gaps, while human testers bring the curiosity and domain knowledge to investigate those areas meaningfully.

The automation coverage difference is remarkable. Traditional automation typically maxes out around 30-50% coverage because maintaining scripts becomes overwhelming. With AI handling the maintenance burden, teams routinely achieve 80-90% coverage without the usual headaches.

| Aspect | Manual Testing | Traditional Automation | AI Testing |
|---|---|---|---|
| Speed | Slow | Fast | Very Fast |
| Coverage | Limited | Moderate | Extensive |
| Maintenance | None | High | Low |
| Creativity | High | None | Limited |
| Cost | High ongoing | High setup | Moderate |
| Reliability | Variable | High | Very High |

When Humans Beat Machines

Usability insights require something AI simply can't replicate—human empathy. You can teach AI to detect if a button works, but only humans can tell you if clicking that button feels satisfying or frustrating. Does the loading animation make users feel confident or anxious? AI has no clue.

Domain knowledge gives human testers a massive advantage in catching business logic errors. If you're testing banking software, an experienced tester knows that certain transaction combinations might violate regulatory requirements—knowledge that would take enormous effort to encode into AI systems.

Ethical judgment becomes crucial when testing features that affect real people's lives. Should this recommendation algorithm prioritize engagement or user wellbeing? Is this data collection practice actually serving users? These questions require human values and moral reasoning that AI can't provide.

When Machines Beat Humans

Massive data sets represent AI's natural habitat. While a human tester might analyze dozens of scenarios before getting overwhelmed, AI happily processes thousands of test cases, looking for patterns across far more combinations of inputs and conditions than any person could review.

Regression suites are where AI really flexes its muscles. Running the same 500 tests across 12 different browser configurations? Humans find this mind-numbing. AI finds it Tuesday.
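The regression-matrix example is just a cartesian product. The sketch below assumes hypothetical configuration axes (3 browsers x 2 operating systems x 2 viewports = 12 configurations) and 500 placeholder test names, then splits the resulting jobs round-robin across parallel workers, one way an overnight run might be spread out. Nothing here is specific to an AI tool; it shows the volume such tools end up scheduling.

```python
from itertools import product

# Hypothetical configuration axes: 3 x 2 x 2 = 12 configurations.
BROWSERS = ["chrome", "firefox", "webkit"]
OPERATING_SYSTEMS = ["windows", "macos"]
VIEWPORTS = ["desktop", "mobile"]

Job = tuple[str, str, str, str]  # (test, browser, os, viewport)


def build_matrix(tests: list[str]) -> list[Job]:
    """Pair every test with every configuration."""
    return list(product(tests, BROWSERS, OPERATING_SYSTEMS, VIEWPORTS))


def shard(jobs: list[Job], worker_index: int, worker_count: int) -> list[Job]:
    """Round-robin slice so each parallel worker gets an equal share."""
    return jobs[worker_index::worker_count]


tests = [f"test_{i:03d}" for i in range(500)]  # placeholder test names
jobs = build_matrix(tests)
print(len(jobs))               # 6000 executions per full run
print(len(shard(jobs, 0, 8)))  # 750 jobs for the first of 8 workers
```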

Overnight execution means your tests never sleep. While your team rests, AI runs comprehensive test suites, analyzes results, and has fresh insights waiting when everyone arrives in the morning. It's like having a tireless team member who's always making progress.

At SuperDupr, we've found the sweet spot isn't choosing between these approaches—it's orchestrating them intelligently. AI handles the heavy lifting and pattern recognition, traditional automation covers the reliable repetitive tasks, and human testers focus on creativity, judgment, and user experience. This combination delivers better results than any single approach could achieve alone.

Selecting Your AI Testing Tech Stack

With dozens of options, focus on what solves your pain points.

• Open-source frameworks (e.g., Selenium + AI add-ons) offer maximum control but require more setup effort.

• Visual engines excel at pixel-perfect UIs and responsive layouts.

• Low-code platforms let non-developers write tests in natural language.

• AI-driven API monitors watch live traffic and auto-generate regression cases.
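For the API-monitoring option, one way to turn captured traffic into a regression check is to store a recorded response and compare the structure of later responses against it: which fields were added or removed, and which changed type. The sketch below is a hypothetical helper, `contract_changes`, over parsed JSON using only the standard library. It inspects only the first element of each list, a simplifying assumption, and a real monitor might also track status codes and value ranges.

```python
import json


def contract_changes(old, new, path: str = "$") -> list[str]:
    """List structural differences (added/removed fields, type changes) between two JSON values."""
    changes = []
    if isinstance(old, dict) and isinstance(new, dict):
        for key in sorted(old.keys() - new.keys()):
            changes.append(f"{path}.{key}: removed")
        for key in sorted(new.keys() - old.keys()):
            changes.append(f"{path}.{key}: added")
        for key in sorted(old.keys() & new.keys()):
            changes.extend(contract_changes(old[key], new[key], f"{path}.{key}"))
    elif isinstance(old, list) and isinstance(new, list):
        if old and new:  # compare the first element as a representative sample
            changes.extend(contract_changes(old[0], new[0], f"{path}[0]"))
    elif type(old) is not type(new):
        changes.append(f"{path}: {type(old).__name__} -> {type(new).__name__}")
    return changes


recorded = '{"id": 7, "total": 19.99, "items": [{"sku": "A1", "qty": 2}]}'
current = '{"id": "7", "total": 19.99, "items": [{"sku": "A1"}], "currency": "USD"}'
print(contract_changes(json.loads(recorded), json.loads(current)))
# ['$.currency: added', '$.id: int -> str', '$.items[0].qty: removed']
```

Removed fields and type changes are the likely breaking changes; added fields are usually safe but still worth surfacing.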

Budget & Skills

Tool licenses are only half the cost—cloud compute and training matter too. Upskill your QA team early; a short workshop often beats months of trial-and-error.

Evaluation Checklist

Map features to real pain points.

Verify CI/CD, ticketing, and chat integrations.

Gauge the learning curve for each user role.

Check data-export options to avoid lock-in.

Confirm industry compliance needs up-front.

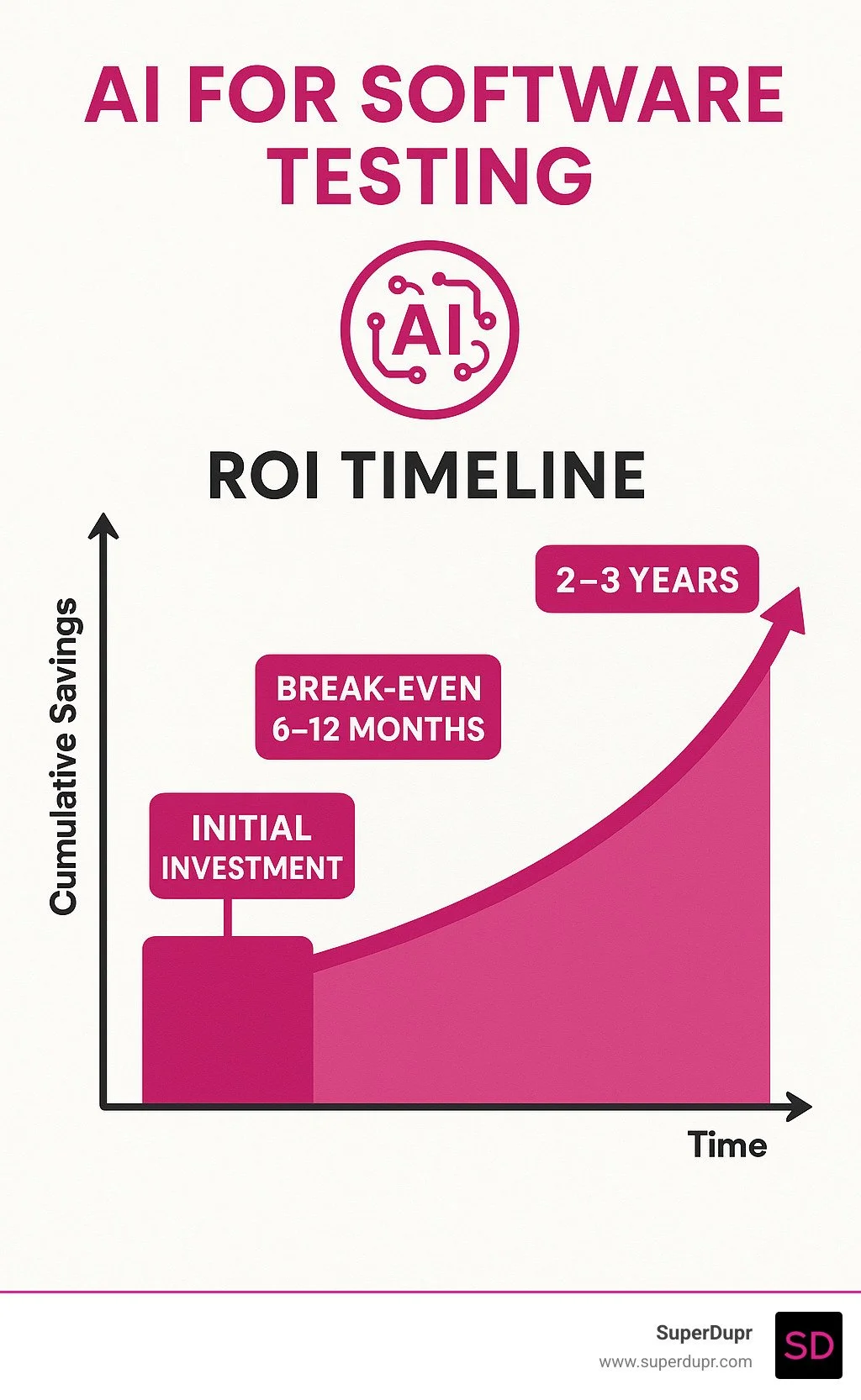

Proof-of-Concept & ROI

Measure creation time, maintenance hours, and bug catch-rate before vs. after AI.

Track coverage delta—most pilots jump from ~40 % to 70 % in weeks.

Factor infra + training costs; most teams break even inside 6–12 months.
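The break-even estimate is simple arithmetic once the pilot has measured hours saved. The sketch below uses purely hypothetical inputs (a $60,000 upfront cost for licenses, setup, and training; $2,000 per month in tool and compute fees; five engineers each saving 8 hours a week at $75 per hour) to show the calculation, not to predict any team's result.

```python
import math
from typing import Optional


def monthly_savings(hours_saved_per_week: float, hourly_rate: float) -> float:
    """Convert weekly hours saved into money saved per month (52 weeks / 12 months)."""
    return hours_saved_per_week * hourly_rate * 52 / 12


def break_even_month(upfront: float, monthly_cost: float,
                     monthly_saving: float) -> Optional[int]:
    """Months until cumulative net savings cover the upfront investment."""
    net_per_month = monthly_saving - monthly_cost
    if net_per_month <= 0:
        return None  # at these rates the investment never pays back
    return math.ceil(upfront / net_per_month)


# Hypothetical pilot: 5 engineers x 8 hours/week saved, $75/hour.
saving = monthly_savings(hours_saved_per_week=5 * 8, hourly_rate=75)
print(saving)                                   # 13000.0
print(break_even_month(60_000, 2_000, saving))  # 6
```

Plugging in your own measured before/after numbers is what turns this from an illustration into a business case.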

Frequently Asked Questions about AI-Driven QA

How long does it take to see ROI from AI testing?

You'll likely see your investment pay off within 6-12 months of implementing AI for software testing. The exact timeline depends on how mature your current testing setup is, your team size, and how complex your applications are.

The good news? You don't have to wait a full year to see benefits. Most teams notice improvements within 2-3 months as self-healing features cut down on maintenance headaches and automated test generation starts covering more ground. The savings really add up over time as AI systems get smarter and learn your applications better.

I've watched clients go from spending 10+ hours every week fixing broken tests to less than 2 hours within their first quarter. That's real time back in your team's hands—time they can spend on actually improving your product instead of babysitting test scripts.

The beauty of AI testing ROI is that it compounds. Those early wins fund your next improvements, creating a positive cycle that keeps delivering value long after your initial investment.

Will AI replace QA engineers?

Absolutely not—and I can't stress this enough. AI for software testing makes QA engineers more powerful, not obsolete. Think of AI as the ultimate testing sidekick that handles the boring, repetitive stuff so your team can focus on the interesting challenges.

Here's what's actually happening: AI crushes repetitive tasks and spots patterns humans might miss, but human judgment remains irreplaceable. Your QA engineers are still the ones designing test strategies, evaluating user experience, and making sense of what AI finds.

The role is definitely evolving, though. Instead of manually clicking through the same test cases over and over, QA professionals are becoming test strategists and AI trainers. They're spending more time on exploratory testing, usability evaluation, and creative problem-solving—the stuff that actually makes products better.

In my experience at SuperDupr, we've seen something surprising: AI actually increases demand for skilled QA professionals. Companies want people who can harness AI tools effectively and interpret the insights they generate. QA engineers who embrace AI become more valuable, not less.

Which tests should I automate first with AI?

Start with your regression test suites—those tests you run over and over to make sure new changes don't break existing features. These are perfect for AI because they have clear pass/fail criteria and provide immediate value while your team gets comfortable with the technology.

Next, focus on critical user journeys that directly impact your bottom line. These are the workflows that absolutely cannot break—think user registration, checkout processes, or core feature functionality. AI can generate comprehensive coverage for these scenarios much faster than manual approaches.

Visual testing offers some of the quickest wins, especially if you have complex user interfaces. AI can catch layout issues and visual regressions that human eyes often miss during manual testing. This is particularly valuable for applications that need to work across different browsers and devices.
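At its simplest, visual regression testing compares a new screenshot against an approved baseline. This sketch represents screenshots as hypothetical 2D lists of grayscale values (0 to 255) instead of loading real images, ignores per-pixel changes below a `tolerance` to absorb anti-aliasing noise, and fails when more than `max_changed` of the pixels moved. Commercial visual AI tools typically go further than raw pixel comparison, for example by understanding layout, so treat this only as the baseline idea.

```python
Image = list[list[int]]  # grayscale pixels, 0 (black) to 255 (white)


def diff_ratio(baseline: Image, candidate: Image, tolerance: int = 16) -> float:
    """Fraction of pixels whose brightness changed by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have identical dimensions")
    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if abs(pa - pb) > tolerance:
                changed += 1
    return changed / total if total else 0.0


def is_visual_regression(baseline: Image, candidate: Image,
                         max_changed: float = 0.01, tolerance: int = 16) -> bool:
    """Fail the check when more than `max_changed` of the pixels moved."""
    return diff_ratio(baseline, candidate, tolerance) > max_changed


baseline = [[255] * 10 for _ in range(10)]   # blank 10x10 white screenshot
noisy = [row[:] for row in baseline]
noisy[0][0] = 250                            # anti-aliasing-sized change
broken = [row[:] for row in baseline]
broken[5] = [0] * 10                         # a dark bar where none should be
print(is_visual_regression(baseline, noisy))   # False
print(is_visual_regression(baseline, broken))  # True
```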

Here's what I'd avoid at first: don't jump into edge cases or highly complex scenarios until your AI for software testing foundation is solid. Start with the straightforward stuff, build confidence in your tools, and let your team gain experience before tackling the tricky cases.

The key is starting small and building momentum. Once you see AI handling your basic regression tests reliably, you'll have the confidence and knowledge to tackle more ambitious automation projects.

Conclusion

The future of quality assurance is here, and AI for software testing is leading the charge. What started as experimental technology has evolved into proven solutions that deliver real results—faster releases, better coverage, and happier development teams.

Looking ahead, we're moving toward autonomous testing agents that truly understand your applications. These smart systems will design their own test strategies, adapt to changes instantly, and maybe even predict issues before they happen. It's like having a crystal ball for your code quality.

Quantum computing might sound like science fiction, but it's closer than you think. Imagine testing scenarios so complex that today's computers can't even process them. That's the kind of breakthrough that could revolutionize how we think about software quality.

Here at SuperDupr, we've watched AI for software testing transform how our clients ship software. The numbers don't lie—teams save thousands of hours, catch more bugs, and deploy with confidence. It's not just about faster testing; it's about building better products that users actually love.

The real question isn't whether AI testing works. We know it does. The question is how quickly you can get started. Every day you wait is another day your competitors might be pulling ahead with smarter, faster testing approaches.

Your development team is already stretched thin. Your release cycles keep getting shorter. Your users expect perfection. AI for software testing isn't just a nice-to-have anymore—it's becoming essential for staying competitive.

Ready to see what AI can do for your testing process? Our services page shows how SuperDupr helps growing businesses implement AI solutions that actually work. We focus on quick wins and measurable results, because your time and budget matter.

The future of software testing is smarter, faster, and more reliable. And it's available right now.